SIM DALTONISM. CODE

Deriving the matrices from only 3 RGB values (pure red, pure green, pure blue) ensures equivalence with the full function for these 3 colors only. It also ignores the non-linearity of sRGB images, which the original code handled approximately with a gamma function (more about sRGB in a follow-up post).

SIM DALTONISM. FULL

The simulator implements two different functions: one based on the "Color-Matrix" algorithm (Coblis v1), and one based on the "HCIRN Color Blind Simulation function" (Coblis v2, the default as of October 2021). The author of the "Color-Matrix" algorithm converted the "HCIRN Color Blind Simulation function", which works in the CIE-XYZ color space, into a faster matrix that works directly on RGB values (that kind of optimization mattered back then). He did so by running the full function on 3 RGB values (pure red, pure green, pure blue) to deduce the 3x3 transformation matrices.
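To make the matrix trick concrete, here is a minimal sketch of deriving a 3x3 matrix by probing a black-box function with the three primaries. The names `derive_matrix`, `apply_matrix` and `toy_full_simulation` are hypothetical, and the toy function is only a non-linear stand-in that I made up for illustration; it is not the HCIRN function.

```python
def derive_matrix(simulate):
    """Build a 3x3 matrix from a black-box simulate(rgb) -> rgb function.

    Column j of the matrix is the output of simulate() for the j-th primary.
    """
    primaries = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
    columns = [simulate(p) for p in primaries]
    # Transpose so that row i holds output channel i for each primary.
    return [[columns[j][i] for j in range(3)] for i in range(3)]


def apply_matrix(matrix, rgb):
    """Multiply a 3x3 matrix by an RGB triplet."""
    return tuple(sum(matrix[i][j] * rgb[j] for j in range(3)) for i in range(3))


def toy_full_simulation(rgb, gamma=2.2):
    """Hypothetical non-linear stand-in for a 'full' simulation (assumption)."""
    linear = [c ** gamma for c in rgb]           # approximate gamma decoding
    merged = 0.6 * linear[0] + 0.4 * linear[1]   # arbitrary red/green mixing
    mixed = (merged, merged, linear[2])
    return tuple(c ** (1.0 / gamma) for c in mixed)  # gamma encoding


def max_error(a, b):
    return max(abs(x - y) for x, y in zip(a, b))


matrix = derive_matrix(toy_full_simulation)
red = (1.0, 0.0, 0.0)
gray = (0.5, 0.5, 0.5)

# Exact on the probed primaries, by construction.
red_error = max_error(apply_matrix(matrix, red), toy_full_simulation(red))
# Not exact elsewhere, because the probed function is not linear.
gray_error = max_error(apply_matrix(matrix, gray), toy_full_simulation(gray))

print("error on pure red:", red_error)
print("error on mid gray:", gray_error)
```

With this toy function the matrix matches on the primaries but is clearly off on mid gray, which is the kind of mismatch to expect whenever the probed function includes a gamma step.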

SIM DALTONISM. SIMULATOR

Coblis and the HCIRN Color Blind Simulation function

A Google search for "color blindness simulation" first returns a website that I mentioned before because it has great introductory material. It also proposes a CVD simulator called Coblis. Being one of the oldest and easiest tools to test, it has inspired lots of other software. But digging into the history of the code is interesting and shows that its accuracy is questionable, especially in the older version. The simulator now relies on the source code of MaPePeR.

SIM DALTONISM. SOFTWARE

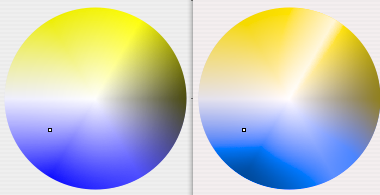

Most correction tools start by simulating how a person with CVD would see the image, and then find some way to spread the lost information into the channels that are better perceived, or play with the light intensity to restore the contrast.

There are many online resources to understand CVD in detail. The Wikipedia page and the chroma-phobe YouTube channel are a good start, but here are some key facts to follow this article:

Human color perception is achieved via cone cells in the retina. Humans with normal vision have 3 kinds of cells, sensitive to different light wavelengths. L cones capture Long-wavelength (~red), M cones capture Medium-wavelength (~green) and S cones capture Short-wavelength (~blue). Their response over the light spectrum is shown in the accompanying figure.

Most color vision deficiencies can be explained by one type of cone cells not behaving properly. Protanopes, deuteranopes and tritanopes respectively lack or have malfunctioning L, M, or S cone cells.

CVD simulations based on physiological experiments generally consist in transforming the image to a color space where the influence of each kind of cone cells is explicit and can be decreased or removed easily. The LMS color space was designed to specifically match the human cone responses and is thus the choice of the vast majority of methods. So the typical pipeline consists in transforming the RGB image into LMS, applying the simulation there, and going back to RGB.

Making sense of the available models and programs

There are many methods to simulate color blindness, and we can easily find lots of open source programs to do so. However, color perception theory is complex and most well-intentioned developers (like me!) end up copying existing algorithms without having a solid understanding of where they come from and which ones are best. It is also difficult to evaluate the accuracy of the simulations, so very bad simulations can still appear reasonable to an untrained observer. While developing DaltonLens I got frustrated by this as I was trying to decide which method I should implement. And being a mild-protan myself, I was often not very convinced by the results of existing methods as they tended to make the simulated images way too exaggerated. But for the first version I had little time and copy/pasted some code for the daltonize filters, which turned out to be quite wrong. So I've decided to dig further into the history of the available algorithms and try to understand where they come from and how much we can trust them.

Let's start by looking at the most popular software and research papers. To complete this section I used the nice thread and software links compiled by Markku Laine.
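As a rough illustration of the RGB to LMS to RGB pipeline mentioned in the key facts, here is a minimal sketch for protanopia. The matrix values are an assumption on my part: they are the ones commonly circulated in open source code and usually attributed to the Viénot, Brettel and Mollon (1999) model, so double-check them before relying on them. The sketch also assumes the input is already linear RGB (no sRGB gamma handling), and all function names are hypothetical.

```python
# Assumed linear RGB -> LMS matrix (values as commonly circulated; verify).
RGB_TO_LMS = [
    [17.8824, 43.5161, 4.11935],
    [3.45565, 27.1554, 3.86714],
    [0.0299566, 0.184309, 1.46709],
]

# Assumed protanopia step in LMS: the missing L response is replaced by a
# combination of M and S, while M and S are left unchanged.
PROTAN_LMS = [
    [0.0, 2.02344, -2.52581],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]


def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def inverse_3x3(m):
    """Invert a 3x3 matrix with the adjugate formula."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    return [
        [(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
        [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
        [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det],
    ]


LMS_TO_RGB = inverse_3x3(RGB_TO_LMS)


def simulate_protanopia(linear_rgb):
    """RGB -> LMS, apply the simulation in LMS, then LMS -> RGB."""
    lms = mat_vec(RGB_TO_LMS, linear_rgb)
    lms_simulated = mat_vec(PROTAN_LMS, lms)
    return mat_vec(LMS_TO_RGB, lms_simulated)


white_out = simulate_protanopia([1.0, 1.0, 1.0])
red_out = simulate_protanopia([1.0, 0.0, 0.0])
print("white ->", white_out)
print("red   ->", red_out)
```

Under these assumed values, white should come out essentially unchanged while pure red collapses to a dark color with nearly equal red and green components. Real images would also need sRGB decoding before this step and encoding after it.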
